|

Real Time Decision Support: Creating a Flexible Architecture for Real Time Analyticsby Greg Barnes Nelson and Jeff Wright, |

Key Message:

Data Warehousing + Messaging + Analytics + Delivery (BI) = True Decision Support

Introduction

A vital pillar of leadership is the ability to gather, assess and understand the right data to effectively drive change. Sharing this data with the right people in the right time is equally important.

Enterprise systems and strategic initiatives have become increasingly commonplace for the support of organizational activities. In the quest for more “intelligent” and informed decisions, data warehousing and business intelligence applications are developed, collecting and delivering data to those authorized to receive. The result of much of this effort is a complete infrastructure designed to move data through the enterprise. Technically, this is owed to drip feeds, wipe and load, “slowing changing” dimension management, swim-lanes, parallelization and data optimization – all geek-speak that obscures the fact that data is still 12 hours old.

This paper focuses on the things we can do today to move the right data to the right people, enabling quality, near real-time decisions. Also, we will address when you should drive for real-time decision support and when it might not be appropriate. Finally, we will discuss a framework that supports low cost, incremental improvements in information architecture, while optimizing business processes to ensure information transparency across the enterprise.

When we think of data warehousing, scenes of global architectures, entity-relationship diagrams and teams of programmers all focused on the singular mission – the creation of a massive data store that can answer any and all questions that the enterprise could ask. Instead of thinking about data warehousing as a massive process that involves tools and technologies like ETL processes, massively parallel machines and business intelligence tools abound – we’d like to have you think about data warehousing as a means to help support information delivery, or decision support.

Decision support is not just a tool or a piece of technology to support reporting; instead it is about making sure that people have the right data, just in time. Raw data, transformed results and analytically based conclusions flow through the organization to support our goal of helping people make better decisions – not just those based on “gut”.

Data Warehousing Defined

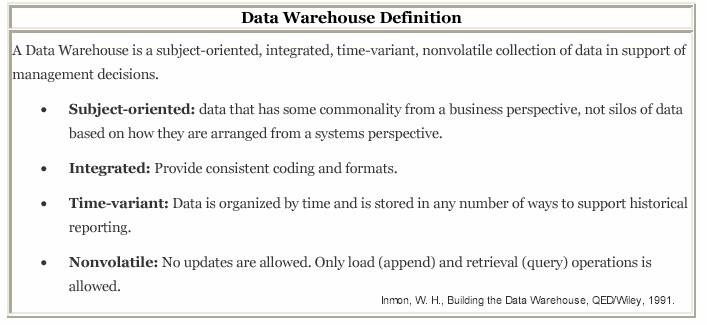

If we look at the history of data warehousing, we will find a rich technological shift in how we think about making data-based decisions – taking data out of the operational systems and giving them their own foundation to support reporting and analysis. When Bill Inmon (1991) first published on the ideas of data warehousing, he suggested some rather specific ideas about what a data warehouse was and provided the following guidelines.

Since the time this original work was published, a number of authors have contributed to the body of knowledge around data warehousing. Since one of the critical “limitations” of Inmon’s work was this idea of non-volatility, subsequent authors – including Inmon himself – concluded that sometime we need something that bridges the gap between the real-time nature of the operational systems and the historical, more strategic perspective of the data warehouse. The Operational Data Store was born out of the idea there needed to be a data structure that was more near the business.

Since the data warehouse began as an architectural response to the silos of operational systems and the challenges that inconsistent and non-integrated data brought, the ODS was the industries’ response to making information more real-time. The data hut, the data mart, operational data store, departmental warehouse, shared data network, corporate information factory and the myriad approaches all focused on information flow in a company. It is all about moving the data from the operational systems through to reporting and analytic applications – and doing that with a high degree of confidence around quality.

Figure 1. Corporate Information Factory

The Real Time Enterprise

The fundamental value of data warehousing is to have a single version of the truth. Bill Inmon, Ralph Kimball, Claudia Imhoff and the thousands of those that followed the data warehousing mantra focused on that as one of the undeniable tenets. Operational systems produce data and reports that change as the business change (as they should). Having a place to look for answers that were consistent and fundamentally “right” was a big deal. Of course, after almost a decade of successes, failures and lots of lessons learned, we think we’ve gotten that story right. Now we want it faster.

Real time data warehousing is a recent industry trend that has caught the attention of industry gurus and IT managers alike. Vendors have attached this opportunity with all of the vigor that we would expect – ETL providors will tell us that it is in the load process where time can be improved; hardware vendors suggest bigger and faster machine; messaging proponents suggest taking the data out of the database and fly through the enterprise with memory-based models. However, the question remains – what are we trying to improve? In our opinion – it is all about improving on “time to decision”.

So what does it mean to have it “faster”? We have been inundated with terms like the zero latency organization, the active data warehouse, real time data warehouse, real time analytics, business activity monitoring, real time personalization and real time business intelligence. White papers, positioning statements, product offerings and letters to the editor have found their way into our inboxes. If the holy grail of software development is reuse, then the corollary to decision support is having the just enough information to make the right decision. To us, real time decision support means “getting the right information, to the right people, just in time.”

We want the data to be good enough with the right level of data quality. We want it on-time, complete and factual. Just as the pendulum swung in the late 1980’s from mainframes to personal computers, we have to temper these real time messages (mostly marketing) with what’s right for our organization. Ultimately, we want good data to support our decisions.

Definition of Real Time

One of the critical challenges of the decision support in general is how quickly can we make sound decisions? The issue really revolves around “time to decision”. The question in the minds of many is what we call this urgency, how do we plan for it and what is the right architecture to get us to that point. As we discussed above, one of the critical components of a data warehouse is that the data is maintained in perpetuity – no rows in the data warehouse are ever modified so as to lose the institutional memory that our data warehouses have offered us. But as we have seen time and time again, data changes. If we look at an example from an on-line trading system a single trade might take on any one of the following characteristics – depending on when we look at the data (even from moment to moment):

The implications are that these various “states” that a single transaction can take is in the interpretation of any “value” of the portfolio. For example, careful examination of the business rules might lead us to very different interpretations of the results if we counted all trades rather than looking at the open trades.

Often the right approach to the volatility of the data requires a huge commitment to understanding the implications of the business – not something that all technology solutions alone have or understand. So when we talk about real time, what kinds of business decisions really need to be made in real time and which are relegated to more of a historical perspective? Sometimes just because we can do something technologically, doesn’t mean we have to. As Regis McKenna points out in his new book, Real Time,

"...almost all technology today is focused on compressing to zero the amount of time it takes to acquire and use information...to make decisions, to initiate action, to deploy resources, to innovate. We have to think and act in real time. We cannot afford to do otherwise."

The real question is how many business problems today can benefit from real time and which can wait until the next morning?

Understanding “Time to Decision”

A critical strategy for any organization is to know what the business need is and let that drive how we use technology to support the business challenge. So where, in our business process, is “time to decision” important? If we are sitting on the web and we click through to a report, we expect that to happen in a timely manner. When we process a credit card transaction, we want that to happen pretty quickly as well. If we run a query on a database asking “who has called in to the customer care center in the last 24 hours?” or “what products have our customers purchased in the past six months?” we have expectations about how long that should take. It seems reasonable that we expect those “transactions” that have one “chunk” of data to be processed should happen much more quickly than those that require lots of chunks. It is this basic assumption that often separates tasks for operational systems with those that require data warehouses.

We see a fundamental shift in the operations of businesses when they combine strategic data with operational data. This shift is seeing its way into some of the most successful companies and their use of technology. For example, a large credit card company uses data from its own databases (account balance, customer name, billing zip code) to process a transaction. In addition, it may augment that data with tertiary sources such as credit scoring models, FICO scores and even neural nets to determine not only whether the transaction should be authorized, but also patterns of historical data and account profiling methods to evaluate risk potential on a single transaction. It is this juncture of operational efficiency (sub-second response time) and strategic use of data in real time that seems so compelling.

Taking data, moving it through a labyrinth of systems, comparing it to historical data points and streaming back enough content to make a decision is suddenly blurring the lines between operational and strategic systems (like data warehouses and business intelligence portals). Getting excited about the possibilities is natural. If we can trade commodities in real time, or determine rail car locations using GPS and reroute them in “live time,” that gives us confidence that data can be used for lots of other things that are not only cool, but practical.

Of course, our excitement is often tempered with the sad fact that we often cannot get data out of the operational and strategic systems that hold them captive. Try getting a new report from our IT department – and your unbridled enthusiasm is quickly dashed against the rocks of hopelessness. Why does it take so long to get a report on our customers when we see individual bits of data flying around our message buses en masse? The dismal performance of our databases has left us with the feeling that real time is laughable.

Organizational Change

Many authors have talked about the real time enterprise and managing expectations about the hype. Getting inventory results every 10 minutes is laughable, says Neil Raden (Raden, 2003), when the trucks don’t leave the warehouse but once a day. So determining what kinds of business decisions can be supported by real time data systems is the key. More importantly, our ability to process the kinds of information that goes into decisions can be hampered by fundamental business processes. For example, it may be useful to have an on-line system to know where the financial state of your company is at a point in time. Having a real time data feed that highlights major expenditures may be helpful, but usually not without knowing where you are relative to your revenue targets and whether or not the expense was anticipated. Further, gaining a (false) sense of security around your financial well-being is not advised when the people that support the data getting into the system process expenses only at month end.

The ability to get information real time and the organization’s capacity to support those decisions at the same pace has more to do with operational preparedness and prioritizing those things that bring significant value to the company.

Components of a Real Time Data Warehouse

The Enterprise Data Architecture

Instead of seeing real time technologies as an all-or-nothing proposition, it might be helpful to think about our systems as an integrated architecture – the information architecture. As architects, our focus is to help companies figure out what bits of information can be useful by themselves and which need the perspective of history or advanced analytics. If we view the integration of data throughout our enterprise as an elaborate chain of connectedness to our business, it becomes easier to understand where real time fits into the “information architecture”.

Figure 2. Operational and Strategic Data Used in Decision Making

As we outlined above, data warehousing is really about the process of creating, populating and querying an information store with useful content about things that are important to the enterprise. Ralph Kimball defines a data warehouse as "a copy of transaction data specifically structured for query and analysis."

By defining the right structure of the data in a persistent store, we can populate the database by using ETL (extraction-transformation-loading) processes that pull data from the OLTP (on-line transaction processing) systems into the data warehouse. Finally, our ability to analyze and report on this data completes the information architecture – our strategy for deriving information from data.

Before examining the components that make up a real time data warehouse, let's revisit the motivation for data warehouses. If the organization already has the data, why do we need a separate "warehouse" copy of it? There are several motivations:

Figure 3. Improving the processes that feed information delivery.

So what is real time decision support? We believe that a RTDSS combines the historical and analytic component of enterprise-level information architecture. It is a framework that includes data warehousing – in a continuous, asynchronous, flow of data – current operational data along with business intelligence that delivers data in near-real time. In other words, data moves straight from the originating source to all uses that do not require some form of staging. This movement takes place soon after the original data is written. Any time delays are due solely to transport latency and (optionally) minuscule processing times to dispatch or transform the instance of data being delivered.

Instead of pulling data in nightly batch loads from the operational systems, the nature of real time decision support demands that data is captured on an ongoing basis from upstream systems “on-demand” or based on events in the business. To that end, we have developed a number of technical approaches to move data through the system – from extract to the business intelligence layer – based on “events” rather than just relying on scheduled processes.

Sourcing Data

Many early data warehouses were built monthly. Years ago, this timeframe was consistent with accounting cycles and feasible for existing technology. After the accounting cycle ended, results were extracted from the business systems using a batch process. In fact, an entire software tools segment — Extract, Transform, and Load (ETL) — has arisen to support batch data integration. As technology has progressed, enabling weekly and daily cycles for data warehousing, the batch load step has remained.

However, the migration from daily to real time requires a different approach. The terms drip feed or trickle feed are used in contrast to batch feed. These terms don't minimize the amount of data that flows, but describe approaches that handle transactions individually as they occur instead of in a batch mode. Real time has typically been made possible through the use of Enterprise Application Integration (EAI) and other middleware tools. These products combine messaging, transformation/routing tools, and adapters to enhance popular commercial software products.

Batch versus trickle feed is not necessarily a dichotomous decision. The distinction between ETL and EAI tools is blurring as vendors adopt features from each others' products. A hybrid solution is likely to make the most sense in many cases. However, the use of any continuous feed has implications for storing, disseminating, and assuring the quality of data.

Of course the next step in the evolution of the batch ETL processes is to move from a daily extract process into one that delivered data throughout the data. But how do we do that and does it make sense? What if the data warehouses were to acquire the same data that flows into and between the transactional systems and that we could act on that data more appropriate to the data warehouse – querying and reporting? These are questions that really can be accomplished with the SAS technology that sits on your servers today. Of course the optimal frequency for a data warehouse refresh depends on a number of factors including the industry, the application, the business process, the time horizon of the business process and the underlying technical infrastructure. In particular, the business process is decisive – if I am analyzing three years worth of sales trends versus introducing a customer intervention to prevent churn while they are on the phone.

The table below was taken from a survey recently completed on how often an organization refreshes its data.

Figure 4. Data Warehouse Refresh Rates.

Storing Data

The data in a data warehouse is there for several reasons, but ultimately it is there to support reports and other types of analyses. The warehouse frequently provides historical context that is not present in operational systems, which is vital for decision support. The warehouse also needs to be structured to support the load process, including the need to cleanse data and harmonize input from multiple systems.

Dimensional data models, or star schemas, are widely used for warehouses because of their advantages for performance and ease of use. Performance is aided by a normalized structure for fact tables, which contain numeric measures. Since fact tables are frequently the largest tables, normalizing these structures optimizes storage space and improves performance. Contextual information for the numeric measures is stored in dimensions, which are typically denormalized to at least some extent. The denormalization of the dimensions simplifies querying and reporting activities by reducing the number of tables that must be joined to ask meaningful business questions.

In a batch loaded warehouse, a new batch of data means many additions to the fact tables and some updates to the dimension tables. There are well-known slowly changing dimension algorithms for capturing how the dimension values change over time. Batch loaded warehouses also make use of their load window to optimize reporting through the creation of pre-computed aggregates and OLAP "cubes."

These storage strategies must be adapted for a real time warehouse. Dimensional data models can be made to work, and dimension change algorithms can be adapted for trickle feeds. However, some optimization techniques like pre-computation and cubes are not feasible without a defined load window. If the business need is simply for near real time data, it may be possible to refresh such structures several times intra-day. However, for true real time other types of optimization are required. True real time normally involves some combination of cached recent results and long-term storage of less recent data in a more traditional warehouse structure.

Disseminating Data

Traditional reporting tools have delivered information in forms such as web-based reports and hard-copy printouts. More interactive forms of data, such as an exported spreadsheet or an OLAP tool are valued for more detailed analysis.

As we increase the timeliness of data in the warehouse, alternative channels for proactive notification become increasingly relevant. Clearly the value of up to the minute inventory data is lost if the visibility to that data is a weekly printed report.

We believe that real time decision support architectures should include a component we call an event system. An event system provides the infrastructure to interpret a particular transaction from the incoming stream of transactions as some kind of event that has meaning in the business process. Consider a fraud detection system for a bank. An ATM withdrawal on its own is not significant, but four withdrawals on the same account within an hour may be cause for concern: an event. Another way to understand events is to make the comparison to exception reports. Exception reports have been used since the early days of "data processing" to let the computer find the data that is out of bounds, incomplete, or interesting in some other way. An event system enables exception reporting to occur in real time as business is conducted.

In addition to detecting events, an event system should provide facilities for notification and storage of the event (with the appropriate contextual information such as source system of record and timestamp). Real time notification can take a variety of forms, from an email to a scrolling ticker on a Dashboard to direct integration into existing operational systems. Going back to the banking example, the most appropriate notification of potential ATM fraud/theft may be to open a ticket in the customer care system (or case management system), allowing the existing service center processes to take over responsibility, contacting the account owner and making sure everything is all right.

Quality Assurance

Data warehousing and decision support projects are about making new uses of data that an organization already has. One reality of this undertaking is that data quality may need to be addressed in order to realize the new purpose for the data. The information on counterparties in a financial system may be perfectly adequate for accounts receivable, but require another level of consistency and detail to be used for reporting on credit exposure. These issues only grow as decision support data is sourced from multiple sources. In a traditional data warehouse, the batch load window provides a window for quality assurance. New batches of data can be staged and cleansed. Quality reports can be run on data while it is still in the staging area. This gives the operational staff for the data warehouse a means for assuring a level of quality of the data on which decisions are based.

A real time warehouse needs a modified approach to quality assurance. The issues are still there, but the techniques must change. The limitations of doing quality checks on the fly for a trickle feed may force more data quality issues to be addressed at the source system.

Note also the reliability aspect of a real time architecture. In a batch loaded warehouse, the decision support system is only dependent on the source system to be available during the scheduled batch extract. Real time architecture demands continuous uptime and reliance on the real time nature of the reporting and business intelligence systems will require much more robust infrastructure such as the services provided by network systems management vendors.

So what’s best for you and your company?

Start with the business process

Achieving real time decision support must begin with the business process. What is the overall business goal? When considering real time, ask yourself: What is the required timeliness of data? Is there benefit to improving timeliness? What is the value of a single version of the “truth” that a batch loaded warehouse provides? Defining the business process and its participants is the necessary foundation.

Define data needs

Once the process is understood, detailed information needs of the participants must be surfaced. What information is missing today? What information could be more easily gathered and used in the context of another system? If data is available in real time, should new channels to disseminate that data be considered?

Locate sources for data

With data needs defined, it is necessary to find sources for that data. Sources should be located and characterized in terms of data quality, consistency with other sources, and timeliness. Ways to access data should be explored. Real time access will probably add complexity to the data access development, and may come with concerns about system resource usage. Issues around access and ownership need to be worked through. If the project team has a clear picture of the data needs and a good characterization of the sources, many unpleasant surprises down the road can be avoided.

Check feasibility

There may be a need to revisit the project goals and tradeoffs between ideal business process and implementation complexity at this point. When the data needs and sources are characterized, technology experts will have a good idea how to build the final solution. By working with the stakeholders at this point, the return on investment can be optimized.

Build it

Other considerations of a real time decision support system:

Summary

Real-time decision support is much more than just the technology building blocks – it is a strategy aimed at solving a business problem that can not be solved by the operational systems or the data warehousing systems alone. In addition, this requires a different architecture to support it. As with any architecture, it is more than just the ETL tool, the database, the BI portal – it is all about an integrated view of how data moves through the organization – it is about identifying the source systems, staging and target databases, data acquisition and integration strategies, network and server capacity requirements, messaging systems, development/QA/production environments, and error and recovery processes.

Crafting the Right Architecture for Real Time Decision Support

Today organizations employ many forms of real time systems – software and hardware systems that process information at a speed natural to the flow of real world processes. In the real time enterprise (or “zero latency” organization), information is processed quickly enough so that decision makers can successfully evaluate and act on events coming from these real time systems. The key components involve an organization’s ability to detect events, evaluate and then respond to them much more quickly than traditional data warehousing strategies have allowed.

By blending operational and strategic systems (data warehousing and analytics), a much richer environment for decision support exists. It is important to understand what types of decisions are good candidates for the real time revolution and which business processes can be morphed into economical processes that are inherent in the real time information architecture. The goal of real time decision support is to facilitate access to information that cuts across multiple applications, systems and data sources. The blending of operational systems that support business-as-usual can combine with historical and analytic results to help provide a superior framework for decision making.

Real time decision support focuses on both the process events important to operations and the business events that support strategic management. By virtue of its label, real time means much more frequent updates of content. We think of the result as getting the most valid and reliable decision-support metrics to the right people, just in time.

We see many values of a real-time data warehouse, including:

We believe that by implementing systems that enable the real time enterprise, organizations can truly provide information-driven decision making, rich in content. Superior decision support is a prerequisite for adaptability and successful organizational change. As an organization, we have experienced tremendous value in helping companies quench their thirst for better, faster data for decision making.

References

Inmon, W. H., Claudia Imhoff and Ryan Sousa. The Corporate Information Factory. Wiley. New York. 2nd

edition. 2002. ISBN: 0-471-39961-2.

Inmon, W.H., Building the Data Warehouse, QED/Wiley, 1991.

Imhoff, Claudia. 2001. "Intelligent Solutions: Oper Marts – An Evolution in the Operational Data Store." DM

Review. Brookfield. 2001.

Kimball, Ralph. The Data Warehouse Toolkit : Practical Techniques for Building Dimensional Data

Warehouses. John Wiley & Sons, February, 1996.

McDonald, Kevin. Wilmsmeier, Andreas. Dixon, David C. and Inmon, W. H. Mastering the SAP Business

Information Warehouse. New York. 1st edition. 2002. ISBN: 0-471- 21971-1.

Raden, Neil. Exploring the Business Imperative of Real-Time Analytics. Hired Brains, Inc. October, 2003.

About the Authors

Greg Barnes Nelson, President and CEO ThotWave Technologies,

started ThotWave to support the industry’s thirst for better, faster and cheaper data

paths... improving time to decision. Prior to ThotWave, Mr. Barnes Nelson spent

several years in consulting, media and marketing research, database marketing and

large systems support. Mr. Barnes Nelson holds a B.A. in Psychology and PhD level

work in Social Psychology and Quantitative Methods. He has more than 18 years in enterprise software development. For more information call 800-584-2819 or contact

greg@thotwave.com .

Jeff Wright, Chief Technology Officer at ThotWave, has over 15 years of diverse experience in the information

technology field. Jeff has led and participated in the implementation of numerous

successful projects in subject areas such as Energy Risk Management, eCommerce,

Customer Relationship Management, Telecommunications, and Manufacturing. His

technology specialties include Java, object-oriented development, web development,

relational databases, and SAS. This experience gives him the insight to dig into client

issues, consult and build the perfect solution for each individual case. Jeff's passion is

building elegant technical solutions that are able to last for the future while at the same

time delivering immediate business value.

Citation

Nelson, G. and J. Wright, "Real Time Decision Support: Creating a Flexible Architecture for Real Time Analytics," DSSResources.COM, 11/18/2005.

Greg Nelson provided permission to archive and feature this specific article at DSSResources.COM on Monday, August 1, 2005. This article was posted at DSSResources.COM on Friday, November 18, 2005.